Tutorials

Time

8:30-10:00, 10:30-12:00, 8th July (Mo)

Room

3E

Abstract

The discovery of correlations in large-scale datasets is an issue of great interest today. Artificial intelligence research is now moving towards integrating data-driven learning with knowledge-guided inference, in order to achieve better reasoning and decision-making rather than mere correlation learning via metric matching. This talk will discuss potential ways to fuse symbolic AI, data-driven learning and reinforcement learning to support causal reasoning.

Slides

Instructors

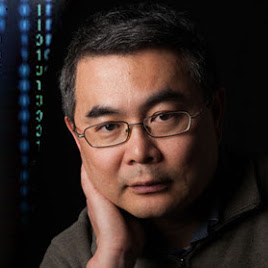

Fei Wu, Zhejiang University, Hangzhou, China

Fei Wu received his B.Sc., M.Sc. and Ph.D. degrees in computer science from Lanzhou University, University of Macau and Zhejiang University in 1996, 1999 and 2002, respectively. From October 2009 to August 2010, Fei Wu was a visiting scholar in Prof. Bin Yu's group at the University of California, Berkeley. He is currently a Qiushi Distinguished Professor in the College of Computer Science at Zhejiang University. He is the vice-dean of the College of Computer Science and the director of the Institute of Artificial Intelligence of Zhejiang University. He has been the chairman of the IEEE CAS Hangzhou Chapter since October 2018. He is currently an Associate Editor of Multimedia Systems and an editorial board member of Frontiers of Information Technology & Electronic Engineering. He has received various honors, such as the National Science Fund for Distinguished Young Scholars of China (2016). His research interests mainly include artificial intelligence, multimedia analysis and retrieval, and machine learning.

Time

13:30-15:00, 15:30-17:00, 8th July (Mo)

Room

3E

Abstract

Analyzing human behaviour in videos is one of the fundamental problems of computer vision and multimedia understanding. The task is very challenging, as video is an information-intensive medium with large variations and complexities in content. With the development of deep learning techniques, researchers have strived to push the limits of human behaviour understanding in a wide variety of applications, from action recognition to event detection. This tutorial will present recent advances under the umbrella of human behaviour understanding, ranging from the fundamental problem of how to learn "good" video representations, to the challenges of categorizing video content into human action classes, and finally to multimedia event detection and surveillance event detection in complex scenarios.

Slides

- https://www.slideshare.net/ssuser2ff343/human-behavior-understanding-from-humanoriented-analysis-to-action-recognition-i

- https://www.slideshare.net/ssuser2ff343/human-behavior-understanding-from-humanoriented-analysis-to-action-recognition-ii

Instructors

Ting Yao, JD AI Research, Beijing, China

Ting Yao is currently a Principal Researcher in the Vision and Multimedia Lab at JD AI Research, Beijing, China. His research interests include video understanding, large-scale multimedia search and deep learning. Prior to joining JD AI Research, he was a Researcher at Microsoft Research Asia in Beijing, China. Ting is an active participant in several benchmark evaluations. He is the principal designer of several top-performing multimedia analytic systems in worldwide competitions such as COCO Image Captioning, Visual Domain Adaptation Challenge 2017, ActivityNet Large Scale Activity Recognition Challenge 2018, 2017 and 2016, THUMOS Action Recognition Challenge 2015, and MSR-Bing Image Retrieval Challenge 2014 and 2013. He is one of the organizers of the MSR Video to Language Challenge 2017 and 2016. For his contributions to Multimedia Search by Self, External and Crowdsourcing Knowledge, he was awarded the 2015 SIGMM Outstanding Ph.D. Thesis Award.

Wu Liu, JD AI Research, China

Wu Liu is a Senior Researcher at JD AI Research, China. He received his Ph.D. degree from the Institute of Computing Technology, Chinese Academy of Sciences, in 2015. His current research interests include video analytics, human behavior analysis, and intelligent video surveillance. He has published more than 30 papers in prestigious conferences and journals in computer vision and multimedia, including CVPR, ACM MM, IJCAI, AAAI, UBICOMP, IEEE T-MM, and IEEE T-CYB. He received the Chinese Academy of Sciences Outstanding Ph.D. Thesis Award in 2016, the Best Student Paper Award at ICME 2016, and the Dean's Special Award of the Chinese Academy of Sciences in 2015. He is also a founding member of ACM FCA, a guest editor of MTAP and MVA, and the Web Chair of ICME 2019.

Time

8:30-10:00, 10:30-12:00, 8th July (Mo)

Room

3G

Website

https://flyywh.github.io/ICME_Tutorial_2019/icme_tutorial.html

Abstract

Intelligent image/video editing is a fundamental topic in image processing that has witnessed rapid progress over the last two decades. Due to various degradations during image and video capture, transmission and storage, images and videos often contain undesirable effects, such as low resolution, low-light conditions, and occlusions by rain streaks and raindrops. Recovering from these degradations is an ill-posed problem. With the wealth of statistics-based and learning-based methods, these problems can be unified as cross-domain transfer, which also covers further tasks such as image stylization.

In our tutorial, we will discuss recent progress in image stylization, rain streak/drop removal, image/video super-resolution, and low-light image enhancement. This tutorial covers both traditional statistics-based and deep-learning-based methods, and includes both biologically driven models, i.e., the Retinex model, and data-driven models. We present an image processing viewpoint that regards popular deep networks as traditional Maximum-a-Posteriori (MAP) estimation. Two kinds of priors are important in this framework: side priors, designed by researchers and learned through multi-task learning, and automatically learned priors, captured by adversarial learning. Three works under this framework are presented: single-image super-resolution, low-light image enhancement, and single-image raindrop removal.
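For readers less familiar with the MAP viewpoint, the generic textbook form is sketched below. The notation is our own assumption and not necessarily the instructors' formulation: $y$ is the degraded observation and $x$ the unknown clean image. By Bayes' rule, maximizing the posterior amounts to minimizing a data-fidelity term plus a prior term, and the priors discussed above would enter through $p(x)$.

```latex
\hat{x} = \arg\max_{x} \, p(x \mid y)
        = \arg\max_{x} \, \frac{p(y \mid x)\, p(x)}{p(y)}
        = \arg\min_{x} \, \big[ -\log p(y \mid x) \; - \; \log p(x) \big]
```

The denominator $p(y)$ does not depend on $x$, so it drops out of the optimization.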

Single-image super-resolution is a classical problem in computer vision. It aims to recover a high-resolution image from a single low-resolution image. This is an underdetermined inverse problem whose solution is not unique. In this tutorial, we will discuss how the problem can be solved by deep convolutional networks in a data-driven manner. We will review different model variants and important techniques for image super-resolution, such as adversarial learning. We will then discuss recent work on hallucinating faces with unconstrained poses and very low resolution. Finally, the tutorial will discuss the challenges of deploying image super-resolution in real-world scenarios.
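A toy degradation model shows why the solution is not unique. The sketch below assumes, purely for illustration, that the low-resolution image is produced by 2x average pooling. The function name `downsample_2x` is hypothetical and does not come from the tutorial. The two high-resolution patches are different, yet they collapse to the same low-resolution pixel. This means the low-resolution input alone cannot determine which patch was the original.

```python
def downsample_2x(img):
    """Hypothetical degradation model: average-pool a 2D list of pixels by a factor of 2.

    Assumes the image height and width are both even.
    """
    h, w = len(img), len(img[0])
    return [
        [
            (img[i][j] + img[i][j + 1] + img[i + 1][j] + img[i + 1][j + 1]) / 4
            for j in range(0, w, 2)
        ]
        for i in range(0, h, 2)
    ]


# Two different 2x2 "high-resolution" patches...
hr_a = [[0, 100], [100, 0]]
hr_b = [[50, 50], [50, 50]]

# ...map to the same 1x1 low-resolution pixel, so inverting the degradation is ambiguous.
lr_a = downsample_2x(hr_a)
lr_b = downsample_2x(hr_b)
print(lr_a, lr_b, lr_a == lr_b)  # prints: [[50.0]] [[50.0]] True
```

Real degradations such as blur, noise and compression make the inversion even less determined. Roughly speaking, data-driven methods resolve the ambiguity by learning which high-resolution images are plausible.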

Slides

- https://www.slideshare.net/ssuser2ff343/intelligent-image-enhancement-and-restoration-from-prior-driven-model-to-advanced-deep-learning-part-1-prior-embedding-deep-rain-removal

- https://www.slideshare.net/ssuser2ff343/intelligent-image-enhancement-and-restoration-from-prior-driven-model-to-advanced-deep-learning-part-2-text-centric-image-style-transfer

- https://www.slideshare.net/ssuser2ff343/intelligent-image-enhancement-and-restoration-from-prior-driven-model-to-advanced-deep-learning-part-3-prior-embedding-deep-super-resolution

- https://www.slideshare.net/ssuser2ff343/intelligent-image-enhancement-and-restoration-from-prior-driven-model-to-advanced-deep-learning-part-4-retinex-model-based-low-light-enhancement

Instructors

Jiaying Liu, Peking University, Beijing, China

Jiaying Liu is currently an Associate Professor with the Institute of Computer Science and Technology, Peking University. She received the Ph.D. degree (Hons.) in computer science from Peking University, Beijing, China, in 2010. She has authored over 100 technical articles in refereed journals and proceedings, and holds 34 granted patents. Her current research interests include multimedia signal processing, compression, and computer vision.

Dr. Liu is a Senior Member of IEEE and CCF. She was a Visiting Scholar with the University of Southern California, Los Angeles, from 2007 to 2008. She was a Visiting Researcher at Microsoft Research Asia in 2015, supported by the Star Track Young Faculties Award. She has served as a member of the Multimedia Systems & Applications Technical Committee (MSA-TC), the Visual Signal Processing and Communications Technical Committee (VSPC) and the Education and Outreach Technical Committee (EO-TC) in the IEEE Circuits and Systems Society, and as a member of the Image, Video, and Multimedia (IVM) Technical Committee in APSIPA. She has also served as the Technical Program Chair of IEEE VCIP-2019/ACM ICMR-2021, the Publicity Chair of IEEE ICIP-2019/VCIP-2018, the Grand Challenge Chair of IEEE ICME-2019, and an Area Chair of ICCV-2019. She was an APSIPA Distinguished Lecturer (2016-2017).

In addition, Dr. Liu is devoted to teaching. She has run MOOC programming courses via Coursera/edX/ChineseMOOCs, which have enrolled more than 60 thousand students. She is also the organizer of the first Chinese MOOC Specialization in Computer Science. She is the youngest recipient of the Peking University Outstanding Teaching Award.

Wenhan Yang, National University of Singapore, Singapore

Wenhan Yang is a postdoctoral research fellow with the Department of Computer Science, City University of Hong Kong. He received the B.S. and Ph.D. (Hons.) degrees in computer science from Peking University, Beijing, China, in 2012 and 2018, respectively. Dr. Yang was a Visiting Scholar with the National University of Singapore from 2015 to 2016. He has authored over 30 technical articles in refereed journals and proceedings. His current research interests include deep-learning-based image processing, bad weather restoration, and related applications and theories.

Chen Change Loy, Nanyang Technological University, Singapore

Chen Change Loy is a Nanyang Associate Professor with the School of Computer Science and Engineering, Nanyang Technological University, Singapore. He is also an Adjunct Associate Professor at the Chinese University of Hong Kong. He received his PhD (2010) in Computer Science from Queen Mary University of London. Prior to joining NTU, he served as a Research Assistant Professor at the MMLab of the Chinese University of Hong Kong from 2013 to 2018. He is the recipient of the 2019 Nanyang Associate Professorship (Early Career Award) from Nanyang Technological University.

His research interests include computer vision and deep learning, with a focus on face analysis, image processing, and visual surveillance. He has published more than 100 papers in top journals and conferences in computer vision and machine learning. He and his team proposed a number of important methods for image super-resolution, including SRCNN, SFTGAN and ESRGAN. His co-authored journal paper on SRCNN was selected as the "Most Popular Article" by IEEE Transactions on Pattern Analysis and Machine Intelligence in 2016, and it remains one of the top 10 articles to date. ESRGAN has been widely used to remaster various classic games such as Half-Life, Resident Evil 2, Morrowind, and Final Fantasy 7.

He serves as an Associate Editor of the International Journal of Computer Vision (IJCV) and the IET Computer Vision journal. He serves or has served as an Area Chair of CVPR 2019, BMVC 2019, ECCV 2018, and BMVC 2018. He is a senior member of IEEE.

Time

13:30-15:00, 15:30-17:00, 8th July (Mo)

Room

3G

Abstract

Personal photo and video data are being accumulated at an unprecedented speed. For example, 14 petabytes of personal photos and videos were uploaded to Google Photos by 200 million users in 2015, and a tremendous amount of personal photos and videos is also uploaded to Flickr every day. How to efficiently search and organize such data presents a huge challenge for both academic research and industrial applications.

To address this challenge, this tutorial will review research efforts in related subjects and showcase successful industrial systems. We will discuss traditional visual search methods and the improvements in visual representations brought by deep neural networks. The instructors will also share their experience of building large-scale fashion search and Flickr similarity search systems, and offer insights into the challenges of extending academic research to industrial applications.

This tutorial will discuss the queries and logs of search engines, and analyze how to address the characteristics of personal media search. By extending search techniques to visual question answering, this tutorial will introduce a new task named MemexQA: given a collection of photos or videos from a user, can we automatically answer questions that help the user recall the events captured in the collection? New datasets and algorithms for MemexQA will be reviewed. We hope MemexQA will shed light on the next-generation computer interface for the exploding amount of personal photos and videos.

Slides

Instructors

Lu Jiang, Google Cloud AI, Sunnyvale, CA, USA

Lu Jiang is a research scientist at Google Cloud AI, advised by Dr. Jia Li and Dr. Fei-Fei Li. He received his Ph.D. in Artificial Intelligence (Language Technology) from Carnegie Mellon University in 2017, advised by Dr. Alexander Hauptmann and Dr. Teruko Mitamura. Dr. Tat-Seng Chua and Dr. Louis-Philippe Morency are his thesis advisors. He was an intern scientist at Yahoo Research in summer 2016, working with Dr. Liangliang Cao, Dr. Yannis Kalantidis and Sachin Farfade on personal photo and video search on Flickr. Prior to that, he was an intern at Google Research, working with Dr. Paul Natsev and Dr. Balakrishnan Varadarajan on large-scale deep learning on the noisy YouTube-8M dataset. He interned at Microsoft Research Asia in 2010, working with Dr. Qiang Wang and Dr. Dongmei Zhang on data mining. Before that, he was a research assistant at Xi'an Jiaotong University, supervised by Dr. Jun Liu, working on text mining and information retrieval. Lu's primary interests lie in the interdisciplinary field of multimedia, machine learning, computer vision and information retrieval, specifically including video understanding and search, weakly supervised learning, deep learning, and cloud machine learning. He regularly serves on the programme committees of premier conferences such as ACM Multimedia, AAAI, and IJCAI. Lu is a recipient of the Yahoo Fellowship and an Erasmus Mundus Scholar. He received the best poster award at IEEE Spoken Language Technology and a best paper nomination at the ACM International Conference on Multimedia Retrieval.

Liangliang Cao, University of Massachusetts, Amherst, MA, USA

Liangliang Cao is a Staff Research Scientist at Google. He is also affiliated with UMass CICS as a research associate professor. His research interests include AI and large-scale data learning, spanning computer vision, language, and speech. Before joining Google, he was a co-founder of HelloVera, and earlier a senior scientist at Yahoo Labs and a research staff member at IBM Watson Research Center. He is an associate editor of The Visual Computer and JVIS. He won first place in the ImageNet LSVRC Challenge in 2010. He is a recipient of the ACM SIGMM Rising Star Award.

Yannis Kalantidis, Facebook Research, Menlo Park, California, USA

Yannis Kalantidis is a research scientist at Facebook AI in Menlo Park, California. He grew up in Athens, Greece, and lived there until 2015, with brief breaks in Sweden, Spain and the United States. He received his PhD on large-scale search and clustering from the National Technical University of Athens in 2014. He was a postdoc and research scientist at Yahoo Research in San Francisco for two years, leading the visual similarity search project at Flickr and participating in the Visual Genome dataset efforts with Stanford. He is currently conducting research on video understanding, representation learning and modeling of vision and language.

Time

13:30-15:00, 15:30-17:00, 8th July (Mo)

Room

5A

Abstract

Computer vision in transportation has recently received increasing attention from both industry and academia, due to the popularity of modern mobile transportation platforms and the rapid development of autonomous driving. In this tutorial, we systematically introduce recent progress in computer vision techniques and their applications in transportation. Specifically, we will provide a general overview of the key problems, common formulations, existing methodologies and future directions. This tutorial aims to inspire the audience and facilitate research in computer vision for transportation.

The tutorial mainly consists of three parts:

Lecture 1: Challenges in using object recognition, optical character recognition and face recognition in transportation.

- Recent progress in object recognition, optical character recognition and face recognition technologies.

- The difficulties and problems in applying these technologies to transportation.

- The solutions and applications.

Lecture 2: Towards Driving Scenario Understanding.

- Object detection, tracking, and segmentation in driving scenarios.

- Vision-based 3D reconstruction of driving scenarios.

- Driving behavior modeling and safety risk analysis.

Lecture 3: Applying transfer learning in CV.

- An introduction to recent transfer learning technologies.

- Applications of transfer learning technologies in CV.

Slides

Instructors

Haifeng Shen, AI Labs, Didi Chuxing, China

Haifeng Shen is a senior expert algorithm engineer at Didi Chuxing and leads the computer vision group in the AI Labs. He received his Ph.D. degree in signal and information processing from Beijing University of Posts and Telecommunications in 2006. He has worked at Panasonic, Baidu, and Microsoft. He built the first speech recognition interface for the XiaoIce chatbot at Microsoft, and his current work focuses on computer vision in transportation. His research interests include computer vision, speech recognition and natural language processing.

Zhengping Che, AI Labs, Didi Chuxing

Zhengping Che is a senior research scientist at DiDi AI Labs. He received his Ph.D. in Computer Science from the University of Southern California. Before that, he received his B.E. in Computer Science from the Pilot CS Class (Yao Class), Tsinghua University. His current research interests lie in machine learning, deep learning and data mining, with applications to temporal data and vision data. He has published several papers in ICML, KDD, ICDM, AMIA and other venues, and has interned at DiDi AI Labs, Mayo Clinic, IBM Research, Google and Hulu.

Guangyu Li, AI Labs, Didi Chuxing

Guangyu Li is a senior research scientist at DiDi AI Labs. In this role, he works on intelligent vehicles, including autonomous driving, intelligent cockpits, and IoT systems. Before that, he developed perception algorithms for self-driving trucks at TuSimple, an autonomous trucking unicorn. Besides his industrial experience, he is also a PhD candidate at the University of Southern California. His research interests lie in computer vision, large-scale sensor systems, and virtual/augmented/mixed reality, with a focus on their applications in modern intelligent transportation.

Yuhong Guo, AI Labs, Didi Chuxing & Carleton University

Yuhong Guo is a principal research scientist at Didi Chuxing. She is also an associate professor at Carleton University, a faculty affiliate of the Vector Institute, and a Canada Research Chair in Machine Learning. She received her PhD from the University of Alberta, and has previously worked at the Australian National University and Temple University. Her research interests include machine learning, artificial intelligence, computer vision, and natural language processing. She has won paper awards from both IJCAI and AAAI. She has served on the Senior Program Committees of AAAI, IJCAI and ACML, and is currently serving as an Associate Editor for TPAMI.

Jieping Ye, Didi Chuxing & University of Michigan, Ann Arbor

Jieping Ye is head of DiDi AI Labs and a VP of Didi Chuxing. He is also an associate professor at the University of Michigan, Ann Arbor. His research interests include big data, machine learning, and data mining, with applications in transportation and biomedicine. He has served as a Senior Program Committee member, Area Chair or Program Committee Vice Chair of many conferences, including NIPS, ICML, KDD, IJCAI, ICDM, and SDM. He has served as an Associate Editor of Data Mining and Knowledge Discovery, IEEE Transactions on Knowledge and Data Engineering, and IEEE Transactions on Pattern Analysis and Machine Intelligence. He won the NSF CAREER Award in 2010. His papers have been selected for the outstanding student paper award at ICML in 2004, the KDD best research paper runner-up in 2013, and the KDD best student paper award in 2014.

Time

8:30-10:00, 10:30-12:00, 8th July (Mo)

Room

5A

Abstract

Object detection is a fundamental problem in the computer vision community, with numerous applications. Recently, with the development of Mask R-CNN and RetinaNet, the object detection pipeline seems to have matured. However, the performance of current state-of-the-art object detectors is still far from meeting the requirements of visual applications. In this tutorial, we will delve into the details of object detection and present improvements in the following aspects: backbone, head, pretraining, scale, batch size, post-processing, Neural Architecture Search (NAS) and fine-grained image analysis (FGIA). In particular, NAS and FGIA will be highlighted, since they are two important future directions for visual object detection.

The tutorial consists of the following three parts:

Lecture 1: Beyond Mask R-CNN and RetinaNet

Lecture 2: AutoML for Object Detection

Lecture 3: Fine-Grained Image Analysis

Slides

- https://www.slideshare.net/ssuser2ff343/object-detection-beyond-mask-rcnn-and-retinanet-i

- https://www.slideshare.net/ssuser2ff343/object-detection-beyond-mask-rcnn-and-retinanet-ii

- https://www.slideshare.net/ssuser2ff343/object-detection-beyond-mask-rcnn-and-retinanet-iii

Instructors

Gang Yu, MEGVII, Beijing, China

Gang Yu is the team leader for detection at MEGVII. He graduated from Nanyang Technological University, Singapore, in 2014. He then joined MEGVII, and his research interests focus on computer vision and machine learning, including object detection, segmentation, skeleton, and human action analysis. Gang Yu has won the COCO 2017 and COCO 2018 Detection and Keypoint challenges.

Xiangyu Zhang, MEGVII, Beijing, China

Xiangyu Zhang is currently the team leader of the base model group at MEGVII Research. He received his doctoral degree from Xi'an Jiaotong University in 2017 and then joined MEGVII Technology. His research interests mainly focus on deep learning models for computer vision, including CNN architecture design, network pruning and acceleration, neural architecture search and object detection/segmentation. He received the CVPR 2016 Best Paper Award and won a series of vision competitions, such as ILSVRC 2015 and COCO 2015/2017/2018. His work has received over 30,000 citations on Google Scholar.

Xiu-Shen Wei, MEGVII, Nanjing, China

Xiu-Shen Wei received his BS degree in computer science and his Ph.D. degree in computer science and technology from Nanjing University. He is now the Research Lead of Megvii Research Nanjing, Megvii Technology, China. He has published about twenty academic papers in top-tier international journals and conferences, such as IEEE TPAMI, IEEE TIP, IEEE TNNLS, Machine Learning Journal, CVPR, ICCV, IJCAI, ICDM, and ACCV. As team director, he achieved first place in the Apparent Personality Analysis competition (in association with ECCV 2016), first place in the iNaturalist competition (in association with CVPR 2019) and first runner-up in the Cultural Event Recognition competition (in association with ICCV 2015). He also received the Presidential Special Scholarship (the highest honor for Ph.D. students) at Nanjing University, and the Outstanding Reviewer Award at CVPR 2017. His research interests are computer vision and machine learning. He has served as a PC member of ICCV, CVPR, ECCV, NIPS, IJCAI, AAAI, and other conferences. He is a member of the IEEE.

Time

8:30-10:00, 10:30-12:00, 12th July (Fr)

Room

5BC

Abstract

Owing to the popularity of Big Data, abundant multimedia data have been accumulated in various domains. At the same time, many machine learning methods have been proposed to exploit these data for prediction. These methods have proven successful in prediction-oriented applications. However, the lack of interpretability of most predictive algorithms makes them less attractive in many settings, especially those requiring decision making. Improving the interpretability of learning algorithms is of paramount importance for both academic research and real applications.

Causal inference, which refers to the process of drawing a conclusion about a causal connection based on the conditions of the occurrence of an effect, is a powerful statistical modeling tool for explanatory analysis. In this tutorial, we focus on causally regularized machine learning, which aims to explore causal knowledge from observational data to improve the explainability and stability of machine learning algorithms. First, we will give some examples of how machine learning algorithms today focus on correlation analysis and prediction, and why those methods are insufficient for decision making. Then, we will give an introduction to causal inference and present some recent data-driven approaches to exploring causal knowledge from observational data, especially in high-dimensional settings. Aiming to bridge the gap between causal inference and machine learning, we will introduce some recent causally regularized machine learning algorithms for improving the stability and interpretability of prediction on multimedia data. Finally, we will discuss future directions, open research problems and challenges in machine learning with causal inference.

Slides

Instructors

Peng Cui, Tsinghua University, Beijing, China

Peng Cui is an Associate Professor at Tsinghua University. He received his PhD degree from Tsinghua University in 2010. His research interests include network representation learning, social dynamics modeling and human behavior modeling. He has published more than 60 papers in prestigious conferences and journals in data mining and multimedia. His recent research won the ICDM 2015 Best Student Paper Award, SIGKDD 2014 Best Paper Finalist, IEEE ICME 2014 Best Paper Award, ACM MM12 Grand Challenge Multimodal Award, and MMM13 Best Paper Award. He has been an Area Chair of ICDM 2016, ACM MM 2014-2015, IEEE ICME 2014-2015 and ICASSP 2013, and an Associate Editor of IEEE TKDE, ACM TOMM, and Elsevier's Neurocomputing. He received the ACM China Rising Star Award in 2015.

Kun Kuang, Tsinghua University, Beijing, China

Kun Kuang received the B.E. degree from the Department of Computer Science and Technology of Beijing Institute of Technology in 2014. He is a fifth-year Ph.D. candidate in the Department of Computer Science and Technology of Tsinghua University. His main research interests include data mining, high-dimensional inference and data-driven causal models. He has published several papers on data-driven causal inference and high-dimensional inference in top data mining and machine learning conferences and journals, such as SIGKDD, AAAI, and ICDM.

Bo Li, Tsinghua University, Beijing, China

Bo Li received a Ph.D. degree in Statistics from the University of California, Berkeley, and a bachelor's degree in Mathematics from Peking University. He is an Associate Professor at the School of Economics and Management, Tsinghua University. His research interests are statistical methods for high-dimensional data, statistical causal inference and data-driven decision making. He has published widely in academic journals across a range of fields, including statistics, management science and economics.

Time

8:30-10:00, 10:30-12:00, 12th July (Fr)

Room

5DE

Abstract

Recent years have witnessed great success in the deployment of deep learning for various tasks. Neural architecture innovation plays an important role in advancing this research direction. From AlexNet and VGG to ResNet and DenseNet, better architecture design has pushed the depth limit of deep models from 7 layers to over one thousand layers. This unprecedented depth endows neural networks with strong representational power.

This tutorial will review classical convolutional network architectures, discuss their underlying design principles, and analyze their strengths and weaknesses. In particular, we will address the recent trend of developing highly efficient lightweight deep models for practical applications with limited computational resources, e.g., mobile phones and wearable devices. Besides hand-designed structures that incorporate human intuition, neural architectures obtained via automatic search have gained great popularity in the past two years. This newly emerged research direction, usually referred to as AutoML, will also be covered in this tutorial.

Slides

- https://www.slideshare.net/ssuser2ff343/architecture-design-for-deep-neural-networks-i

- https://www.slideshare.net/ssuser2ff343/architecture-design-for-deep-neural-networks-166489354

- https://www.slideshare.net/ssuser2ff343/architecture-design-for-deep-neural-networks-iii

Instructors

Gao Huang, Tsinghua University, Beijing, China

Gao Huang is an Assistant Professor with the Department of Automation at Tsinghua University. Previously, he was a postdoc with the Department of Computer Science at Cornell University. His research interests lie in machine learning and computer vision, with a special focus on deep learning. He has authored more than 30 papers, which have received more than 6000 citations. He is a recipient of the CVPR Best Paper Award (DenseNet), the CAA Doctoral Dissertation Award and the Super AI Leader - Pioneer Award.

Jingdong Wang, MSRA, Beijing, China

Jingdong Wang is a Senior Researcher with the Visual Computing Group, Microsoft Research, Beijing, China. His areas of current interest include CNN architecture design, human pose estimation, semantic segmentation, person re-identification, large-scale indexing, and salient object detection. He has authored one book and 100+ papers in top conferences and prestigious international journals in computer vision, multimedia, and machine learning. He authored a comprehensive survey on learning to hash in TPAMI. His paper was selected as a Best Paper Finalist at ACM MM 2015. Dr. Wang is an Associate Editor of IEEE TPAMI, IEEE TCSVT and IEEE TMM. He has been an Area Chair or a Senior Program Committee Member of top conferences, such as CVPR, ICCV, ECCV, AAAI, IJCAI, and ACM Multimedia. He is an ACM Distinguished Member and a Fellow of the IAPR. His homepage is https://jingdongwang2017.github.io/.

His representative works include deep high-resolution representation learning (HRNet), interleaved group convolutions, supervised saliency detection (discriminative regional feature integration, DRFI), neighborhood graph search (NGS) for large-scale similarity search, composite quantization for compact coding, and the Market-1501 dataset for person re-identification. He has shipped a dozen technologies to Microsoft products, including Bing search, Bing Ads, Cognitive Services, and the XiaoIce chatbot. His NGS algorithm is a foundational element of many products. He developed the Bing image search color filter using his efficient salient object detection algorithm. He also developed the first commercial color-sketch image search system.

Lingxi Xie, Huawei Inc., Beijing, China

Lingxi Xie is currently a senior researcher at Noah's Ark Lab, Huawei Inc. He obtained his B.E. and Ph.D. in engineering, both from Tsinghua University, in 2010 and 2015, respectively. He also served as a post-doctoral researcher at the CCVL lab from 2015 to 2019, during which time he moved from the University of California, Los Angeles to Johns Hopkins University. His homepage is http://lingxixie.com/.

Lingxi's research interests lie in computer vision, in particular the application of deep learning models. His research covers image classification, object detection, semantic segmentation and other vision tasks. He is also interested in medical image analysis, especially object segmentation in CT or MRI scans. Lingxi has published over 40 papers in top-tier international conferences and journals. In 2015, he received the outstanding Ph.D. thesis award from Tsinghua University. He is also the winner of the best paper award at ICMR 2015.


Time

8:30-10:00, 10:30-12:00, 12th July (Fr)

Room

5DE

Abstract

Deep learning has been successfully applied to a range of multimedia topics in recent years, from object detection, semantic classification and entity annotation to multimedia captioning, multimedia question answering and storytelling. Academic researchers are now shifting their attention from identifying which problems deep learning can address to exploring which problems it can NOT address. This tutorial starts by summarizing six 'NOT' problems that deep learning currently fails to solve: low stability, debugging difficulty, poor parameter transparency, poor incrementality, poor reasoning ability, and machine bias. These problems share a common origin: the lack of deep learning interpretation. The tutorial maps the six 'NOT' problems to three levels of deep learning interpretation: (1) Locating: accurately and efficiently identifying which features contribute most to the output. (2) Understanding: bidirectional semantic access between human knowledge and deep learning algorithms. (3) Accumulation: effectively storing, accumulating and reusing the models learned by deep learning. Existing studies at each of these three levels will be reviewed in detail, followed by a discussion of interesting future directions.

Instructors

Jitao Sang, Beijing Jiaotong University, Beijing, China

Jitao Sang is a full Professor at Beijing Jiaotong University. He received his PhD from the Chinese Academy of Sciences (CAS) with the highest honor, the special prize of the CAS President Scholarship. His research interests are in multimedia data mining and machine learning, with award-winning publications in prestigious multimedia conferences (best paper at PCM 2016, best paper finalist at MM 2012 and MM 2013, best student paper at MMM 2013, and best student paper at ICMR 2015). He received the ACM China Rising Star award in 2016. So far, he has authored one book and co-authored more than 60 peer-reviewed papers in multimedia-related journals and conferences. He was program co-chair of PCM 2015 and ICIMCS 2015, and area chair of ACM MM 2018 and ICPR 2018. He has served as guest editor for journals such as MMSJ and MTA, and has given tutorials at ACM MM 2014/2015/2018, MMM 2015, ICME 2015 and ICMR 2015.

Time

13:30-15:00, 15:30-18:00, 12th July (Fr)

Room

5DE

Abstract

Due to the rapid growth of multimedia big data and related novel applications, intelligent recommendation systems have become increasingly important in our daily lives. Over the past decades, various multimedia technologies have been developed by different research communities (e.g., multimedia systems, information retrieval, and machine learning). Meanwhile, recommendation techniques have been successfully leveraged by commercial systems (e.g., Amazon, YouTube and Spotify) to help users cope with information overload and to provide them with high-quality content, interactions and services.

Although several tutorials and courses have been dedicated to multimedia recommendation in the last few years, to the best of our knowledge this tutorial is the most advanced and comprehensive one focusing on intelligent content analytics and its core application to recommending various types of media content. We plan to summarize the research in this direction and to strike a good balance between theoretical methodologies and real system development (including several industrial approaches). The core contributions to the literature include:

- Explaining why advanced recommendation systems are important for Web-scale multimedia retrieval, understanding and sharing.

- Examining current commercial systems and research prototypes, focusing on comparing the advantages and the disadvantages of the various strategies and schemes for different types of media documents (e.g., image, video, audio and text) and their composition.

- Reviewing key challenges and technical issues in building and evaluating modern recommendation systems under different contexts.

- Discussing and reviewing various limitations of the current generation of systems.

- Making predictions about the road ahead for scholarly exploration and industrial practice in multimedia and other related communities.

We also plan to hold an open discussion on several promising research directions of significant technical importance and to explore potential solutions. We hope that this tutorial provides an impetus for further research in this important direction.

Slides

Instructors

Jialie Shen, Queen's University Belfast, Belfast, United Kingdom

Jialie Shen is a Reader in Computer Science, School of Electronics, Electrical Engineering and Computer Science, Queen’s University Belfast (QUB), Belfast, United Kingdom. He received his PhD in Computer Science from the University of New South Wales (UNSW), Australia, in the area of large-scale media retrieval and database access methods. Before moving to QUB, Dr. Shen worked as a faculty member in Hong Kong, Singapore, Australia and England, and as a researcher in the information retrieval research group (led by Professor Keith van Rijsbergen) at the University of Glasgow, Scotland. Dr. Shen's main research interests include information retrieval, machine learning, multimedia systems and audio/video analytics. His research has been published or is forthcoming in leading journals and international conferences, including ACM SIGIR, ACM Multimedia, IJCAI, AAAI, IEEE Transactions and ACM Transactions.

Jian Zhang, University of Technology Sydney, Sydney, Australia

Jian Zhang is an Associate Professor in the School of Electrical and Data Engineering, University of Technology Sydney, Australia. He received a PhD in electrical engineering from the University of New South Wales (UNSW), Sydney, Australia, in the area of image processing and video communication. From 1997 to 2003, he was with the Visual Information Processing Laboratory, Motorola Labs, Sydney, as a Principal Research Engineer and Research Manager of Visual Communications. From 2004 to July 2011, he was a Principal Researcher and a Project Leader with Data61 (formerly NICTA), Australia, and a Conjoint Associate Professor with the School of Computer Science and Engineering, UNSW. He is currently with the Global Big Data Technologies Centre, Faculty of Engineering and Information Technology, University of Technology Sydney. He is the author or co-author of more than 150 papers and book chapters, and holds six issued US and China patents. His current research interests include social multimedia signal processing, large-scale image and video content analytics, retrieval and mining, 3D computer vision and intelligent video surveillance systems.

Dr. Zhang was the General Co-Chair of the International Conference on Multimedia and Expo (ICME) 2012 and Technical Program Co-Chair of IEEE Visual Communications and Image Processing (VCIP) 2014. He is currently an Associate Editor for the IEEE TRANSACTIONS ON MULTIMEDIA and the EURASIP Journal on Image and Video Processing (since 2016). He was an Associate Editor for the IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS FOR VIDEO TECHNOLOGY (2006-2015).

Tutorial Chairs